Saturday, June 28, 2008

Why not adopt the Google User Experience Team's design principles for off-the-desktop digital interactions?

In case you haven't noticed, interactive screens of all shapes and sizes have appeared in urban (and suburban) spaces outside the home and workplace, both in places where we've come to expect them and in unexpected places. Our cell phones have morphed into web browsers with larger, higher-resolution screens.

The web and the real world have become intertwined.

But often, it is not pretty.*

It is as if all of the blocked pop-ups, banner ads, and fast-forwarded commercials escaped, and found new homes on the screens we find staring at us in airports, malls, arenas, and big-box store aisles!

Is it possible for user experience principles to enhance our digital lives beyond our laptops and desktops?

A recent post on the Putting People First blog outlines some great advice from the Google User Experience Team that holds true for off-the-desktop applications, including multi-touch displays, digital signage, kiosks, mobile devices, and anything else under the umbrella of DOOH (digital out-of-home):

"The Google User Experience team aims to create designs that are useful, fast, simple, engaging, innovative, universal, profitable, beautiful, trustworthy, and personable. Achieving a harmonious balance of these ten principles is a constant challenge. A product that gets the balance right is “Googley” – and will satisfy and delight people all over the world."

The ten principles that contribute to a “Google User Experience” are:

1. Focus on people – their lives, their work, their dreams

2. Every millisecond counts

3. Simplicity is powerful

4. Engage beginners and attract experts

5. Dare to innovate

6. Design for the world

7. Plan for today’s and tomorrow’s business

8. Delight the eye without distracting the mind

9. Be worthy of people’s trust

10. Add a human touch

*Previous posts highlighting usability problems - and other things not pretty - related to off-the-desktop user experience:

Off-the-desktop musings about future interactions: User experience, user-driven design, Universal Usability, Airports, and the "Internet of Things"

Usability/Interaction Hall of Shame

Not-so-useful Information Displays in a University Building

Technology-Supported Shopping and Entertainment: User Experience at Ballantyne Village - "A" for Concept, "D" for Touch-screen Usability

Friday, June 27, 2008

Off-the-desktop musings about future interactions: User experience, user-driven design, Universal Usability, Airports, and the "Internet of Things"

My vision for technology-supported human-world interaction is much different from what I see cropping up in public spaces, despite emerging technological concepts such as the Internet of Things and Locative Media.

If you are familiar with my blogs, you know that I maintain an ongoing quest for off-the-desktop examples for my "Usability Hall of Fame". While I'm out and about, I often meet with disappointment, especially when I come across large displays or interactive touch screens.

The video clip below, taken on June 26th at the Detroit Metro Airport, is an example of what I mean. I was excited to encounter a touch-screen information kiosk, but I was not impressed with the clunky look and feel of the application. It was more like a point-and-click web page than an innovative interactive information portal.

If you look closely, you'll see that the weather posted on the screen did not accurately represent the near 90-degree heat that I encountered that day. The weather information must not have been updated!

This kiosk was located in a new terminal. Although there were many information displays dotted around, during the two hours that I was at the airport, I never once saw anyone use them.

It is sort of difficult to use a touch screen when there is a garbage can obstructing the interaction.

It is not possible to interact with an interactive touch screen that is not working.

I did see people take a look at the more traditional, non-interactive displays that were located in the center walkways:

Last week, on my way to pick up my luggage at the Detroit Metro Airport, I noticed three kiosks. At the time, I didn't know where to go to retrieve my bags, and I wasn't sure where to catch the shuttle to the rental car lot. I was a bit puzzled to find these kiosks in this spot, since they did not provide the kind of information that most people coming through this section of the airport would need.

The above information kiosks were situated in a row of three, one representing each of the counties that make up the Detroit metropolitan area. Since the kiosks were located at the foot of the escalators leading to the baggage claim section of the airport, people stopping to interact with the kiosks would most likely cause a minor traffic jam. Although the kiosks were in plain view, it was not difficult to see why they were not used, or even noticed.

- Most people who are on their way to the baggage claim area are focused on getting their luggage and heading out. They may not be in the mood to learn all that they can about the finer points of Macomb, Wayne, or Oakland counties from talking head infomercials.

- Although the kiosks provided a reasonable amount of screen "real estate", the design of the applications allowed for no innovative touch-screen interaction. The applications were displayed on touch-screen surfaces, with the upper section dedicated to video and the lower section activated for touch. This design provided only one type of touch interaction, a small area to touch in order to activate the video.

- The displays with information about baggage carousels were located in a spot out of view from this location.

Since I'm obsessed with displays, as soon as I found my bag, I walked up closer to see what was so important about this huge sign. Not until I got right up to the surface did I notice that it offered some interactivity:

If you have your cell phone handy, and if you notice the offer in the first place, you can get free sync ringtones from the display area while you wait for your bags. Since I was carrying my purse and pulling a suitcase and a smaller carry-on bag, I was in no position to fumble around for my phone to get a new ringtone. It wasn't a convenient location. Where could I set down my purse? What if I had kids in tow?

Obviously, the designers of this set-up thought that it would be a good idea to offer people the chance to download ringtones as they waited for their luggage. What did the designers forget? Perhaps they did not look at the bigger picture long enough to think through a workable, useful user experience.

It would make more sense to provide ringtones at a more convenient location in the airport, such as the waiting areas or the food courts. A thoughtful redesign of this display would let people know intuitively that a ringtone service was available.

After I left the baggage area, I headed to an information display, consisting of a small touch screen, an array of small lighted displays, and phones, to find out how to get to the shuttle to the rental car lot. Again, I was not impressed. The applications were a bit more visually appealing than the displays in the terminal, but the touch-screen interaction was clunky.

Related:

To get a better understanding of my message and the importance of looking at bigger-picture issues such as user experience, user-driven design, and emerging off-the-desktop technologies, take some time to look over the work and writings of people like Julian Bleecker, Chris O'Shea, and others who are thinking along similar paths. Also check the resource links on the sidebar of this blog.

Below are a couple of interesting quotes from Julian Bleecker:

(Julian Bleecker is from the Near Future Laboratory, a design, development and research consultancy.)

"Forget about the Internet of Things as Web 2.0, refrigerators connected to grocery stores, and networked Barcaloungers. I want to know how to make the Internet of Things into a platform for World 2.0. How can the Internet of Things become a framework for creating more habitable worlds, rather than a technical framework for a television talking to a reading lamp? Now that we've shown that the Internet can become a place where social formations can accrete and where worldly change has at least a hint of possibility, what can we do to move that possibility out into the worlds in which we all have to live?" - Julian Bleecker, Ph.D.: Why Things Matter: A Manifesto for Networked Objects: Cohabiting with Pigeons, Arphids and Aibos in the Internet of Things (pdf)

"At its core, locative media is about creating a kind of geospatial experience whose aesthetics can be said to rely on a range of characteristics ... from the quotidian to the weighty semantics of lived experience, all latent within the ground upon which we traverse." - Julian Bleecker and Jeff Knowlton: Locative Media: A Brief Bibliography and Taxonomy of GPS-Enabled Locative Media, Leonardo On-line Electronic Almanac

Coincidence?

Julian Bleecker spends time contemplating interactions while he's in airports: Time Spent, Well

Chris O'Shea's blog, Pixelsumo, "is a blog about play, exploring the boundaries of interaction design, videogames, toys, and playgrounds." Chris is a resident of somth;ng labs, a shared studio space full of creatives who incorporate technology into their work.

- SURFACES: Chris O'Shea's posts and links pertaining to interactive surfaces, displays, touch-screens, and multi-touch.

- PLAYGROUNDS: Posts and links about technology-supported playgrounds

Airport Technology for the Usability Hall of Fame!

Embedded Information: Airport

Thursday, June 19, 2008

Multi-touch Interaction Design Techniques: Links to Resources from AVI 2008 - Advanced Visual Interfaces Conference

ACM-SIGCHI, ACM-SIGMM, and SIGCHI recently held the AVI 2008: Advanced Visual Interfaces conference in Naples, Italy. Topics covered included interaction models and techniques, interactive visualization, interactive systems, and user interaction studies.

I found the information linked below worth exploring:

Workshop on Designing Multi-touch Interaction Techniques for Coupled Public and Private Displays pdf

Slides and videos from the workshop

From the conference website:

"This workshop will specifically focus on the research challenges in designing touch interaction techniques for the combination of small touch driven private input displays such as iPhones coupled with large touch driven public displays such as the Diamondtouch or Microsoft Surface."

Topics of Interest

- Understanding the design space and identifying factors that influence user interactions in this space

- The impact of social conventions on the design of suitable interaction techniques for shared and private displays

- Exploring interaction techniques that facilitate multi-display interfaces

- Personal displays as physical objects for the development of interaction techniques with shared multi-touch displays

- Novel interaction techniques for both private and public multi-touch devices as part of multi-display environments

- Techniques for supporting input re-direction and distributing information between displays

- Developing evaluation strategies to cope with the complex nature of multi-display environments

- Ethnography and user studies on the use of coupled public and private display environments"

Call for Papers for Personal and Ubiquitous Computing Special Issue

Pictures from the Multi-touch Interaction Workshop Publication:

Interesting Technology: InfiniTouch Expands the Concept of Touch-Screen Interaction

I just learned about an interesting form of touch technology that works on a variety of surfaces and materials. Someone from the company behind InfiniTouch left a message on one of my blog posts, suggesting that readers would be interested in learning more about it. I agree. Here is the link to the post: Hands On Computing: How Multi-Touch Screens Could Change the Way we Interact with Computers and Each Other (link to Scientific American Article)

Here are a couple of videos about this technology, called InfiniTouch, by QSI Corporation.

Force Panel Touch Screen Gas Pump

In this example, items such as DVDs, food, and coffee drinks can be ordered and paid for at the pump.

InfiniTouch Music Kiosk

This explains how the technology behind the kiosk works. The material is rugged and weather-proof. The interesting thing with this technology is that the touch-sensitivity is not limited to the screen. The rest of the surface can be touch-enabled, even the frame!

According to a 2007 article about InfiniTouch on the eMediaWire website, InfiniTouch has many useful features:

- Capable of dynamic self-calibration

- Scalable to any size, proven up to 1.2 meters

- Supports two-sided display

- Usable with various elevations: flat plane, curved surface, 3-D surface, projected surface, or a surface with holes, such as a speaker

- Highly light transmissive

- Touchable with a finger, gloved hand, stylus, or other object

- Operable when covered with ice, water, dust or debris

- Capable of capturing signatures

InfiniTouch senses force as the z-axis. Items that rise above the frame can also be touch-enabled. It can sense touch on 3D surfaces, as well as on curved and irregular surfaces, and it is designed to withstand outdoor public use. At present, it does not support multi-touch interaction.
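QSI's "Sensing Touch by Sensing Force" paper (linked below) describes how their system actually works, and I have not reproduced it here. As a rough illustration of how sensing force alone can locate a touch, here is a minimal Python sketch. It assumes a rigid panel resting on force sensors at its corners, with the panel's own weight already subtracted out; the function name, panel size, and readings are hypothetical. Because the panel is in balance, the touch point is simply the force-weighted average of the sensor positions.

```python
def touch_location(readings):
    """Estimate a single touch point from force sensors under a rigid panel.

    `readings` is a list of ((x, y), force) pairs: each sensor's position
    and the extra force it measures (panel weight assumed already tared out).
    For a rigid panel in static equilibrium the torques balance, so the
    touch point is the force-weighted average of the sensor positions.
    Returns ((x, y), total_force), or None when nothing is pressing.
    """
    total = sum(force for _, force in readings)
    if total <= 0:
        return None
    x = sum(px * force for (px, _), force in readings) / total
    y = sum(py * force for (_, py), force in readings) / total
    return (x, y), total

# Hypothetical 100 x 60 mm panel with a sensor under each corner;
# these readings correspond to a 4 N press at (25, 15).
readings = [((0, 0), 2.25), ((100, 0), 0.75), ((0, 60), 0.75), ((100, 60), 0.25)]
print(touch_location(readings))  # ((25.0, 15.0), 4.0)
```

The total force comes along for free, which is the "z-axis" part. If two fingers press with equal force at different spots, this calculation reports one point halfway between them. That is my own inference about why a force-only design like this sketch would have trouble with multi-touch, not a claim about QSI's product.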

Additional Information:

Video: InfiniTouch Introduction (This video demonstration will give you an idea of the tactile "touchy-feely" possibilities of this technology.)

Wall Panels Video (This video provides examples of how the technology can be used in public spaces.)

How It Works

Sensing Touch by Sensing Force pdf

Wednesday, June 18, 2008

Dan Saffer's Upcoming Book: Interactive Gestures: Designing Gestural Interfaces

Dan Saffer, the author of Designing for Interaction: Creating Smart Applications and Clever Devices, is finishing up a new book about the design of gestural interfaces. This should be a great book, judging from the quality of Saffer's previous work.

Chapter 1:

Interactive Gestures: Designing Gestural Interfaces pdf

(Page 21 of this chapter has a picture of a NUI Group member's touch table.)

Tuesday, June 17, 2008

Emotiv System's Neural Game Controller Headset: Human-Computer Interface of the future?

The June issue of IEEE Computer magazine arrived in my mailbox today. As I thumbed through the pages, I noticed an article about Emotiv's neural interface, a headset that serves as a game controller.

In the course of looking up information for this post, I learned that Emotiv Systems will provide an open-source SDK for developers, which is great news for me. I'm a member of IGDA's Game Access SIG, and I've taken a couple of game design courses. I'd love to see what could be done with the SDK!

Another interesting piece of news is that Emotiv is partnering with IBM to look at how this technology can be used for 3D applications that reach beyond gaming, such as 3D virtual social environments, training, and simulations.

Video from GDC '08:

Game Interaction via Thoughts and Facial Expressions: EPOC - Emotiv Systems Neural Interface

Scientific American 4/14/08 Demiurge website

Head Games: Video Controller Taps into Brain Waves

(Peter Sergo)

"Emotiv Systems introduces a sensor-laden headset that interprets gamers' intentions, emotions and facial expressions"

From the Scientific American article:

"By the end of this year, San Francisco–based Emotiv's sensor-laden EPOC headset will enable gamers to use their own brain activity to interact with the virtual worlds where they play. The $299 headset's 14 strategically placed head sensors are at the ends of what look like stretched, plastic fingers that detect patterns produced by the brain's electrical activity. These neural signals are then narrowed down and interpreted in 30 possible ways as real-time intentions, emotions or facial expressions that are reflected in virtual world characters and actions in a way that a joystick or other type of controller could not hope to match."

"The EPOC detects brain activity noninvasively using electroencephalography (EEG), a measure of brain waves, via external sensors along the scalp that pick up the electrical bustle in various parts of the furrowed surface of the brain's cortex, a region that handles higher order thoughts."

To see the Emotiv neuro headset in action, take a look at a video from the 1/9/08 Scientific American Video News: Emotiv's Mind Controlled Software Suite. Wearing the headset, you can make facial expressions that are replicated on the face of an on-screen avatar, and you can move items around the screen with your thoughts. The Emotiv system also relies on sensing the electrical activity of muscle movements.

Emotiv EPOC overview

Demiurge Studios is developing games for the Emotiv headset.

Emotiv's GDC '08 Press Release Photos:

"Total Communication" Partnership with IBM

"IBM intends to explore how the Emotiv headset may be used for researching other possible applications of Emotiv's BCI technology, including virtual training and learning, collaboration, development, design and sophisticated simulation platforms for industries such as enterprise and government." via Business & Games

Example of an application controlled by the Emotiv neural headset:

Note: There were problems with the application during the demonstration. Apparently the sound crew's AV headsets interfered with the system. This is something that needs to be tested in a variety of settings. If you live in a high-density area, you are bound to be surrounded by interference. This is why out-of-the-lab testing is so important!

Comment:

All of this intrigues me. I'm in the middle of neuropsychology training, learning the latest about neuroanatomy, brain function, and the evaluation and treatment of young people with traumatic brain injuries. I'm a member of IGDA's Game Access SIG, and I'm also a school psychologist by day.

I know how much kids and teens would like to explore this type of gaming. It looks like applications using this technology could be very useful to people who have a range of disabilities. What I'd like to see is a way to connect the system to a haptic controller, like the Novint Falcon. Ideally, the system should work with other sorts of devices and systems as well.

I'm sure that in two to three years, brain-computer interfaces will not rely on a headset. I'm thinking that the interface could work nicely in a "wearable", embedded in a hoodie or a fashionable cap.

Update 6/18/08:

Discovery Tech: Upgrading Humans - Tracy Staedter chats with Desney Tan, Microsoft Research

"Well, we believe that there is a lot of potential to be harvested from interfacing directly with the human physiology... So we've done a lot of work with brain-computer interfaces, using brain-sensing devices to infer what's going on in our brains, which can be pretty useful in a variety of situations."

"We've also done quite a bit sensing muscles, and other parts of the body. Many of the technologies we use utilize very simple electromagnetic sensing, since the human body is a pretty prolific generator of these signals." -Desney Tan

Desney Tan (Photo from Discovery Tech)

Multi-Touch Plug-in for NASA World Wind?!

NUI group member Paul D'Intino is developing a Multi-Touch display called Orion mt. He's working on a multi-touch plug-in for NASA World Wind.

This would be a cool way of exploring the world for students who have access to multi-touch screens at school!

The plug-in is a work in progress. You can download the latest version from Paul's blog.

Paul is "Fairlane" on the NUI Group's forums.

Sunday, June 15, 2008

New iPhone Interaction: Unity Technologies 3D Game Development Tool

The new iPhone has features that are ideal for a variety of applications:

Mobile learning

Mixed Reality Gaming

Context-Aware computing

Mini "brain-games" for cognitive rehabilitation, memory support

Health care- 3D medical visualizations

Organization and contact management through "push" technology

More possibilities.....

Unity Engine to Power iPhone Gaming

Multi-touch Tables and Displays - Search for Flexible Designs: I might just have to build my own!

For more information on this topic, see my posts on the Interactive Multimedia Technology blog:

DigiBoard Multi-Touch Mixed Reality Game; Ideas for future design of a flexible, adjustable multi-touch surface..

Emerging Interactive Technologies, Emerging Interactions, and Emerging Integrated Form Factors

If you know of anyone working on an adjustable "surface" that can be positioned upright, horizontally, and at an angle, please let me know. I might just have to take my designs and build my own!

Thursday, June 12, 2008

Mathematical Musings: "Understanding Human Mobility Patterns" - The Data Universe is Expanding

It is easy to tell from the title of my blog that I am interested in how technology impacts human interaction out in the world, beyond the confines of the desktop. Every so often, usually after reading an article from IEEE, or even Scientific American, I find myself having a math dream, where I'm trying to solve problems that have a lot of "delta", change over time. There is a need for new mathematical models to help us better understand our interactions with technology when we are out and about in our world.


Apple announced the next generation 3G iPhone, which will be in the hands of thousands, if not millions, by July 11th, 2008, out in the land of mobility. The new iPhone will have GPS, and provide users with interesting location-aware applications.

Just imagine the reams of data that this will generate when the multitudes are in action! How will we make sense of this? Will there be a way to ensure that this information will be personally useful? Is it something that we all will care about, if appropriately informed?

Like the child said in Annie Hall, "The Universe is Expanding!". In 2008, the data universe is expanding.

Fortunately, our universe has a handful of gifted scientists who have the drive and mathematical knowledge to think about these problems in real life. Here is a great example of what I am talking about:

Understanding Human Mobility Patterns (pdf), Marta C. González, César A. Hidalgo & Albert-László Barabási, Nature Vol. 453, 5 June 2008, doi:10.1038/nature06958

Barabasi is the director of Notre Dame's Center for Complex Network Research.

Here is the abstract:

- "Despite their importance for urban planning [1], traffic forecasting [2] and the spread of biological [3–5] and mobile viruses [6], our understanding of the basic laws governing human motion remains limited owing to the lack of tools to monitor the time-resolved location of individuals. Here we study the trajectory of 100,000 anonymized mobile phone users whose position is tracked for a six-month period. We find that, in contrast with the random trajectories predicted by the prevailing Lévy flight and random walk models [7], human trajectories show a high degree of temporal and spatial regularity, each individual being characterized by a time independent characteristic travel distance and a significant probability to return to a few highly frequented locations. After correcting for differences in travel distances and the inherent anisotropy of each trajectory, the individual travel patterns collapse into a single spatial probability distribution, indicating that, despite the diversity of their travel history, humans follow simple reproducible patterns. This inherent similarity in travel patterns could impact all phenomena driven by human mobility, from epidemic prevention to emergency response, urban planning and agent-based modelling."
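In the paper, the "characteristic travel distance" is measured with the radius of gyration: roughly, how far a person typically strays from the center of their own movements. Here is a minimal Python sketch of that idea, plus a simple count of the most-visited places. It assumes each user's positions are already available as planar (x, y) coordinates in kilometers; the function names and the toy home/work trajectory are my own illustration, not code or data from the paper.

```python
import math
from collections import Counter

def radius_of_gyration(points):
    """Root-mean-square distance of each recorded position from the
    trajectory's center of mass. `points` is a list of (x, y) tuples,
    assumed to be planar coordinates in kilometers."""
    n = len(points)
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    return math.sqrt(sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points) / n)

def top_locations(points, k=2):
    """Return the k most frequently recorded locations with the share of
    observations at each, a rough view of 'returning to a few places'."""
    counts = Counter(points)
    return [(loc, c / len(points)) for loc, c in counts.most_common(k)]

# Toy trajectory (hypothetical): 6 records at "home", 4 at "work".
trajectory = [(0.0, 0.0)] * 6 + [(3.0, 4.0)] * 4

print(round(radius_of_gyration(trajectory), 3))  # 2.449
print(top_locations(trajectory))  # [((0.0, 0.0), 0.6), ((3.0, 4.0), 0.4)]
```

Home and work are 5 km apart here, yet the radius of gyration comes out smaller, because most of the records sit at home and pull the center of mass toward it.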

This sort of research sets the stage for people to develop applications that discover, analyze, and predict everyone's daily patterns. In many urban areas, security webcams are mounted on buildings and street corners. Data collected from webcams, combined with data from GPS-enabled cell phones running location-aware programs, might prove useful for emergency planning, homeland security, and crime prevention.

The same mix of data might also prove to be useful to marketers. Can you imagine walking around the city, trying to dodge all of the virtual pop-up and banner ads that might worm out of cyberspace into the streets in the form of electronic billboards, and at the same time, pushed to your mobile device?!

What if all of this information got into the "wrong" hands? In my opinion, privacy and security issues have not been carefully considered at this point.

Not yet multi-touch: HP's Next Generation TouchSmart PC - and my thoughts about smudges and germs

The Next Generation HP TouchSmart PC

The new TouchSmart PCs, which can display high-definition content, aren't on the market yet, but HP is taking pre-orders.

NextWindow, a company that produces large interactive touch-screen displays, including those with multi-touch capabilities, was responsible for the touch-screen embedded in the TouchSmart PC.

Although some people can't wrap their minds around the idea of interacting with a touch screen because they don't like germs and smudges, or because they are locked into a point-and-click mindset, I think touch screens are worth considering. Developers are working on new ways of interacting with information, and I'm sure that we will all be pleasantly surprised by what the future holds.

When the display is large, touch screens make it easier for two or more people to collaborate.

Some people aren't too sure about getting their hands on a touch screen. Why?

They are worried about smudges and the spread of germs.

Even little children know that it is important to wash their hands frequently throughout the day to prevent the spread of germs. At school, they are not permitted to eat or drink when they use computers, which is a good habit to develop at an early age.

The same hands that are smudging up our non-touch screen displays on our PCs and laptops are also leaving lots of germs, and even food particles, on computer mice and keyboards. I'm talking about adults who should know better than to eat and drink at the computer and neglect to wash their hands!

I'm not too worried about it. If you care about health and reducing the spread of germs, most likely you make sure your laptop or PC is regularly disinfected, and you make sure your hands are clean, just like your mama taught you.

Related:

HP Redefines Home Computing, Putting the Digital Lifestyle at People's Fingertips with New TouchSmart PC's

HP Introduces World's First Affordable Color-critical Display

Gizmodo: HP TouchSmart iq506 Brings New Interface, Bigger Screen, and Intel Processor

(cross posted)

Monday, June 9, 2008

Verizon, can you hear me now? I want an iPhone! Live from WWDC

Verizon, can you hear me now?!!!! I want an iPhone.

For more pictures and info about iPhone apps, take a look at Ryan Block's engadget post:

Steve Jobs Keynote Live from WWDC 2008

Sunday, June 8, 2008

PHUN- Social Interactive Physics Sandbox

UPDATE 4/24/11: Phun is commercially available and now optimized for use on the newer multi-touch, multi-user SMARTboards. It is now known as Algodoo.

Enjoy the video and the music!

For more information, see "Engaged Learning and Social Physics: PHUN, an Interactive 2D Physics Sandbox"

Friday, June 6, 2008

Emerging Interactions: Organic User Interfaces, Force Interaction, Online 3D Tag Galaxy

INTERESTING INTERFACE INTERACTIONS

Steven Wood's Online 3D Tag Galaxy, using Flickr and Papervision 3D:

For more information, see Emerging Interactive Technologies, Emerging Interactions, and Emerging Form Factors

Dr. Roel Vertegaal and the Human Media Lab team at Queen's University are involved in several interesting emerging technology projects, including Organic User Interfaces:

Organic Interface: New computers change shape, respond to touch..

“We want to reduce the computer’s stranglehold on cognitive processing by embedding it and making it work more and more like the natural environment,” says Dr. Vertegaal. “It is too much of a technological device now, and we haven’t had the technology to truly integrate a high-resolution display in artifacts that have organic shapes: curved, flexible and textile, like your coffee mug."

Interactive Blogjects:

Paper Windows Prototype Video:

A fantasy demo of this concept, by an alumnus of Queen's University:

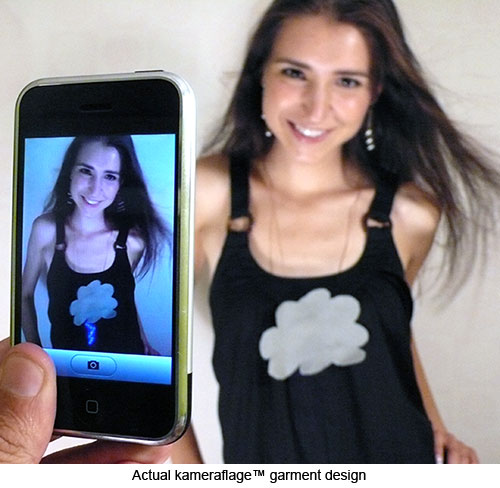

Kameraflage™: Augmented reality with a camera phone, created by the same alumnus:

"Context-Sensitive Display Technology. kameraflageTM technology encodes a layer of information that can only be viewed by the human eye when looking at an image of the scene taken by a camera."

Microsoft Research's Force-Sensing Screen (pdf)

(James Scott, Lorna Brown, Mike Molloy)

BBC Article: "May the force be with you"

(Darren Walters)

"The technology allows users to apply force to their portable device in order to carry out on-screen actions, such as flip a page in a document or switching between applications."

Sunday, June 1, 2008

Dance Interaction, Gestures, Patents, and Technology: Doug Fox, of Kinetic Interface, poses some good questions...

Doug Fox, of Kinetic Interface, is the author of the blog of the same name, which is part of the GreatDance website.

From looking over Doug's posts, it is clear that he has spent quite a bit of time pondering issues related to dance and technology interaction. According to Doug, Steve Jobs/Apple is working with Ohad Naharin, a choreographer. He points out that "choreographers are essentially rapid-prototypers who can create the basics of new movement vocabularies in a few hours."

Reflecting on my previous dance training, I think Doug is on to something.

Here are some excerpts from the Kinetic Interface blog:

"The Kinetic Interface blog on Great Dance starts with the premise that by focusing on the body and movement we can better understand, engage with, and contribute to many of the technological and scientific changes that are reshaping our daily lives."

Gesture Patents Point Way to Full-Body Interfaces

"To ask a bit of an improbable question, will dancers be prohibited from certain movement sequences because they are protected by the US Patent and Trademark Office? This is not likely to happen. But what might happen is that the most natural of human gestures and movements may eventually become proprietary instruments of interface designers.

On another front, I would like to create a video contest where dancers were invited to create their own series of gestures and movements that were intended to control new PC and mobile interfaces. I think that dancers would come-up with some highly innovative approaches that had not previously been considered."

Creating Dance for Developers of User Interfaces

"Say, you were creating a dance piece for a geeky audience of software developers. How would you go about creating and structuring a dance so that developers could relate to it as if it were, in some sense, the user interface for a software program?.....I would find it very interesting to speak with choreographers who have pondered some variation of my questions connecting dance to user interface design. Or who have created dances for non traditional dance audiences."

For related information about interfaces, Doug Fox recommends reading Alex Iskold's article, The Rise of Contextual Interfaces on ReadWriteWeb. Be sure to read the comments to Iskold's article, if you have the time.

PART TWO

I studied dance formally when I was young, and even through my college years at the University of Michigan. (This was a long time ago- Madonna was in one of my classes!)

Despite all of my dance training, I really did not think about the way dance interaction relates to technology until recently, when I came across Doug Fox's Kinetic Interface blog and the Great Dance website. Not long after that, I had the opportunity to see a performance of Exquisite Interaction at the Visualization in the World symposium. The performance was part of the Dance.Draw project, a collaboration between the Dance and Software and Information Systems (HCI) departments at UNC-Charlotte.

![[ExquisiteInteraction.jpg]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEjHgdHs-M0M_fXtuka0iTsRxZJbTpjX9PCxGyVqCEaXZABt3Vb2lr93M3JNQt36oq0UdwAACsf2xtAU2b08sSzuFelBziegQz3XUcrT5kNVnKn8M3vAERU_Mvw1PXN1pXDR1G_f6xDaTIA/s1600/ExquisiteInteraction.jpg)

- GestureTek, a company that reportedly developed patents for gestures as early as 1991.

- Jeroen Arendsen's A Nice Gesture "Stories of gestures and sign language about perception, semiotics, and technology". Jeroen is a Ph.D. student and also an interaction designer and usability specialist.

- Merce Cunningham, a famous choreographer, collaborated with software developers to create the DanceForms choreography software. (Also see the Character Motion website.) "DanceForms 1.0 inspires you to visualize and chronicle dance steps or entire routines in an easy-to-use 3D environment. For choreography, interdisciplinary arts and dance technology applications."

Merce Cunningham Dance Company

- PBS American Masters Documentary on Merce Cunningham, and also John Cage, the musician he collaborated with from 1942-1992. Both were connected with R. Buckminster Fuller, the guy who revolutionized the field of engineering.

- Cycling74 is the software company behind Max/MSP/Jitter and other cool stuff. Cycling74 products are used by musicians, multimedia artists, and dancers. Academic/student discounts are available.